This post was inspired by an amazing workshop given by Mark Burgman at the recent Student Conference on Conservation Science in Cambridge. I have done my best to get across what I learnt from it here, but it is not the final word on this issue.

It turns out experts aren’t necessarily all that good at estimation. They are often wrong and overconfident in their ability to get stuff right. This matters. A lot.

It matters because experts, particularly scientists, are often asked to predict something based on their knowledge of a subject. These predictions can be used to inform policy or other responses. The consequences of bad predictions can be dramatic.

For example, seismologists in L’Aquila, Italy, were asked by the media whether earthquakes in the area posed a risk to human life. They famously told reporters there was ‘no danger.’ They were wrong.

Not all cases are so dramatic, but apparently experts make these mistakes all the time. This has profound implications for conservation.

Expert opinion is used all the time in ecology and conservation where empirical data is hard or impossible to collect. For example, the well-known IUCN Red List draws on large pools of expert knowledge in determining range and population sizes for species. If these are very inaccurate then we have a problem.

Fortunately, there may be a solution.

This solution was first noticed in 1906 at a country fair. At this fair people were taking part in a contest to guess the weight of a prize ox. Of the 800 or so people that took part, nobody got the correct weight. However, the average guess was closer to the true weight than the guesses of most people in the crowd and most of the cattle experts. As a group these non-experts outperformed the experts.

Apparently this is now a widely recognised phenomenon.
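To see why averaging helps, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the true weight, the spread of the guesses and the idea that guesses scatter evenly around the truth are invented, not data from the 1906 contest.

```python
import random
import statistics


def crowd_vs_individuals(true_value, guesses):
    """Return the crowd mean, its error, and the share of individuals it beats."""
    crowd_mean = statistics.mean(guesses)
    crowd_error = abs(crowd_mean - true_value)
    # Count the individuals whose own guess is further from the truth than the mean is.
    beaten = sum(abs(g - true_value) > crowd_error for g in guesses)
    return crowd_mean, crowd_error, beaten / len(guesses)


# Synthetic data only: a hypothetical true weight and 800 noisy, unbiased guesses.
rng = random.Random(42)
TRUE_WEIGHT = 1200.0  # assumed value for illustration, not the real ox
guesses = [rng.gauss(TRUE_WEIGHT, 100.0) for _ in range(800)]

crowd_mean, crowd_error, share_beaten = crowd_vs_individuals(TRUE_WEIGHT, guesses)
print(f"crowd mean: {crowd_mean:.1f}, error: {crowd_error:.1f}")
print(f"share of individuals beaten by the crowd mean: {share_beaten:.0%}")
```

The averaging only works this well because the simulated errors are independent and centred on the truth. If everyone in the crowd shared the same bias, the mean would inherit it.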

Building on this, a technique called the Delphi method has been developed. It aims to improve people’s estimates by getting them to make an estimate, discuss it with other people in their assigned group, and then make another estimate. You then take the mean estimate of the group.

Mark Burgman and colleagues have come up with a modified version of the technique. This involves people estimating something, giving the highest reasonable value for their estimate, their lowest reasonable value and a measure of their confidence (50-100%) that their limits contain the true value. Then you discuss the estimates in your group, revise them and use the revised estimates to derive a group mean. This can be done many times, and it seems estimates are better with more iterations.
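As a rough sketch of how the answers from one round might be recorded and averaged, assuming each person supplies the four numbers described above: the `Estimate` class, the `group_summary` function and all the numbers are hypothetical, and the published method may combine the answers differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Estimate:
    lowest: float      # lowest reasonable value
    best: float        # best guess
    highest: float     # highest reasonable value
    confidence: float  # belief (0.5 to 1.0) that [lowest, highest] contains the truth

    def __post_init__(self):
        if not self.lowest <= self.best <= self.highest:
            raise ValueError("expected lowest <= best <= highest")
        if not 0.5 <= self.confidence <= 1.0:
            raise ValueError("confidence must lie between 0.5 and 1.0")


def group_summary(estimates):
    """Average the lowest, best and highest values across the group."""
    return {
        "lowest": mean(e.lowest for e in estimates),
        "best": mean(e.best for e in estimates),
        "highest": mean(e.highest for e in estimates),
    }


# Hypothetical answers from three people, before and after discussion.
round_1 = [
    Estimate(50.0, 120.0, 300.0, 0.8),
    Estimate(80.0, 100.0, 150.0, 0.9),
    Estimate(20.0, 200.0, 400.0, 0.6),
]
round_2 = [
    Estimate(60.0, 120.0, 250.0, 0.8),
    Estimate(80.0, 110.0, 180.0, 0.85),
    Estimate(50.0, 150.0, 300.0, 0.7),
]

for label, answers in (("round 1", round_1), ("round 2", round_2)):
    print(label, group_summary(answers))
```

The stated confidence is stored but left out of the average here, because averaging confidence levels attached to intervals of different widths is not obviously meaningful. How best to use it is a separate design choice.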

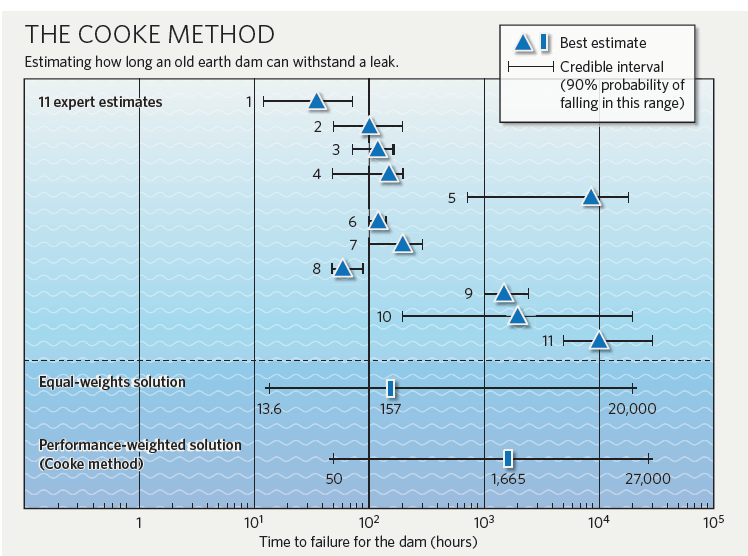

I think this is a great idea. But you can take the idea even further. You can do this with a series of questions, some of which you know the answer to. Using respondents’ answers to these questions you can calibrate how expert your experts actually are. Then you can weight people’s estimates based on the confidence you have in them, like in the example below.

This is an idea pretty similar to meta-analysis. We give more weight to the estimates we are more confident about.
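Here is one way the weighting could be sketched. The scoring rule is my own simple stand-in (the inverse of each expert's mean relative error on the known-answer questions), not the scoring used by Burgman and colleagues, and the experts, questions and numbers are all made up.

```python
def calibration_weights(answers, truths):
    """Weight each expert by the inverse of their mean relative error.

    answers maps an expert name to their guesses for the calibration questions;
    truths holds the known (non-zero) answers in the same order.
    """
    raw = {}
    for expert, guesses in answers.items():
        errors = [abs(g - t) / abs(t) for g, t in zip(guesses, truths)]
        mean_error = sum(errors) / len(errors)
        # The small constant avoids dividing by zero for a perfect score.
        raw[expert] = 1.0 / (mean_error + 1e-9)
    total = sum(raw.values())
    # Normalise so that the weights sum to one.
    return {expert: w / total for expert, w in raw.items()}


def weighted_estimate(estimates, weights):
    """Combine estimates for the unknown quantity using the calibration weights."""
    return sum(weights[expert] * value for expert, value in estimates.items())


# Hypothetical calibration questions with known answers.
truths = [100.0, 50.0]
answers = {
    "expert_a": [110.0, 45.0],  # within 10% on both questions
    "expert_b": [150.0, 80.0],  # much further off
}
weights = calibration_weights(answers, truths)

# Hypothetical estimates for the quantity we actually care about.
unknown = {"expert_a": 200.0, "expert_b": 300.0}
print(weights)
print(weighted_estimate(unknown, weights))
```

In this toy case the combined estimate sits much closer to expert A's answer than a plain mean of 250 would, because expert A did better on the questions with known answers. This is the same logic as giving precise studies more weight in a meta-analysis.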

These approaches have been around for a while but appear to have been used very rarely in ecology and conservation. Given how often expert opinion is used in conservation, it is important we think hard about how reliable it actually is. It will never be perfect, but it can be better. This work is a step in the right direction.

Nice post! I also attended the first workshop by Mark Burgman last week and I was equally impressed.

I’ve been thinking about these types of analyses over the past few days and was wondering whether they can be extended to socio-ecological studies that try to quantify value judgements by using questionnaires.

If a respondent knows the correct response but has an incentive to lie (maybe while assessing rule-breaking behaviour, for instance) then we can use a randomised response technique (RRT) to reduce the incentive to be dishonest. In a different scenario, however, respondents might have logical answers to a value-based question, when their actions are really determined by emotional, sub-conscious factors they are unaware of (like the tree-hugger who laments consumerism but stands in a queue for the newest iPhone, for example). Could we modify Burgman’s methods to identify these sub-conscious values?

Here is how it might work: (1) ask the respondent to quantify a value-based question, (2) the same respondent is then asked to quantify the same value but this time in the way they expect their peers will answer the question. I think that the answer of the second question could reflect a “projection bias” where someone projects their own unrecognised feelings onto others. This second response can then possibly be used to “anchor” the response to the first question. Using these two answers and the average responses of the full survey, it might be possible to disentangle the subjective biases of respondents from their objective thoughts.

I’m sure someone must have done this before… so excuse me if this is an old idea in the social sciences.

I’m sure I replied to this before.

Anyway, here goes again.

I can sort of see what you mean. Can you give me a concrete example where it could be used though? I think that might help me understand your point a little better.

I was also confused when I read my original comment again…

Anyway, I started typing a more detailed response and it quickly snowballed and got more complicated than I had first anticipated. So, I added a few links, a figure and a video clip and turned it into a post.

You can read it here:

http://solitaryecology.com/2013/03/30/novel-methods-to-correct-for-cognitive-bias-when-assessing-conservation-values/

Please don’t hesitate to point out if I am missing something glaringly obvious! This is not my field of expertise, so I am treading on the uncharted ground of the social sciences.